There is good package design research and bad, but understanding the fundamentals ensures that the right kind of research is applied in the right way.

By Jonathan Asher

During the nearly 30 years I’ve been in this business (going from being the “research guy” at a design firm to the “design guy” at a research firm), I’ve seen research become a well-integrated part of the package design process. Most designers now at least accept its use, and the more enlightened ones actually embrace it.

But there are still some designers who blatantly trash the use of research. I’m not sure if it’s out of concern over having their creativity “graded” or whether they’ve just had a few bad experiences (there can be a tendency to “shoot the messenger,” you know).

Admittedly, there is good research and bad research – just as there is good design and bad design. But it’s important to understand some fundamentals so that the right kind of research is applied in the right way or, at the very least, so that there can be a meaningful discussion about how to do so.

I thought it would be useful to share my answers to some frequently asked questions about packaging research, answers I often give to designers, researchers, marketers, students, the media, and pretty much anyone who’ll listen.

Q: What's the difference between qualitative and quantitative research, and why would I use one over the other?

A: Essentially, qualitative research is a method of engaging with a few people in a detailed manner, with an opportunity for give and take, and spontaneity. This can be done with one person at a time (known as a one-on-one interview or an “IDI,” an in-depth interview); with two or three people at a time (known as dyads or triads); or even with six to 10 at a time (known as focus groups). These discussions can last anywhere from 30 minutes to two hours and are typically observed by interested parties from behind a one-way mirror.

This method is designed as a way to hear consumers speak in their own language, with the output used to guide next steps. Qualitative research is not meant to determine whether a design will perform well in market. It has no predictive ability, and no competent researcher would ever allow it to be used as such (nor should any competent designer). The output is not “data,” so numbers should be strictly avoided when describing the outcome.

Qualitative research can be used prior to any design development to gain insights into current brand imagery, communications, personality, etc., and to uncover design equities (which elements are sacred and which are baggage?). This is extremely useful input for informing the design brief and keeps designers from “spinning their wheels” and going down misguided paths.

But qualitative research is most frequently used at the screening stage to explore a range of concepts and determine which ones best meet objectives and resonate most with consumers. The learning helps identify how best to move forward with a more focused number of designs (usually about two) and the effort required to optimize them.

Quantitative research differs from qualitative in that it engages a large number of people (typically a few hundred) in a highly structured manner. It provides an opportunity to make predictions about how something will perform over time, across a given population of people.

The interviews are conducted one-on-one and most often across a range of geographic locations. Interviewing can last up to 40 minutes and it can take up to four weeks to complete the required number of interviews.

While individual interviews can be observed, this is generally not done because it disrupts the process. It’s also avoided because the responses heard in a few interviews at one location may not represent those of the full sample and, taken out of context, can be misleading.

Quantitative research is mostly used at the late stages of the design process, once design concepts have been narrowed to just a few, to confirm which option is best to take to market. By using a good representation of the shelf on which the package will appear, predictive measures of visibility and shopping dynamics can be obtained.

Quantitative research can be conducted online (providing efficiencies in cost and timing), but there are many limitations with online testing that make it unsuitable as a method for validating package designs.

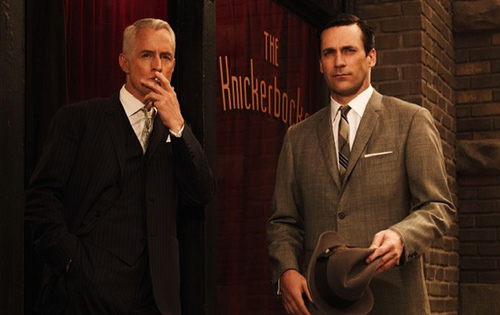

Focus groups have been around since the days of Mad Men, but many improvements have been made

Q: Focus groups have been around since the days of Mad Men. Are there any new techniques to make them more useful?

A: While the overall structure and some of the techniques have been around for many years, many improvements have been made. These include:

- The screening of respondents (e.g., more representative of target consumers, weeding out “professional respondents”);

- The locations in which they’re conducted (e.g., vast and varied locales as needed to get to the right respondents vs. the most convenient or desirable);

- The types of questions asked and the manner in which they’re asked (indirect, projective, engaging, open-ended, etc.);

- And the means of moderating the group (e.g., ensuring that everyone is heard, that no single respondent takes over and that honest answers are gleaned, even on embarrassing subject matter).

Specifically with regard to packaging research, we at Perception Research Services (PRS) have evolved our qualitative methods to ensure that we obtain the most meaningful insights. This includes a keen sensitivity to the types of information designers need and how they will apply the insights to their work, which helps frame our questions and, most importantly, shapes our spontaneous follow-ups.

As part of our qualitative process, we show a representative shelf display (whenever possible) for contextual reference. In addition, we supplement our focus groups with eye-tracking measures. This way, in addition to knowing whether an on-pack message is compelling, we’ll also know whether shoppers actually see it. And we’ll know early enough in the process to give designers plenty of lead time to make improvements.

Q: A good designer just knows which design will work. Do we really need to waste time and money testing?

A: Good designers can create dozens of seemingly effective ways of meeting a design brief. And if these concepts are all distinctly different from one another (as they should be in the early stages), how will the selection of which one to pursue be made?

Does the designer get to pick his or her favorite? Marketers fear the designer would choose the wildest option, the one that will look best in a portfolio but might scare off current users.

Does the vice president of marketing choose his or her favorite? That could be the safe, closest-to-the-current option, which may not be able to sufficiently move the needle.

In either case, it’s important to recognize that we – the designers, marketers and researchers involved in the project – are not the target market. And while we may be steeped in relevant knowledge and expertise, we are probably not best suited to decide which design will perform most effectively among its intended audience and in the market conditions in which it must thrive.

It’s amazing how the same people who claim NOT to have sufficient time or money to conduct research somehow manage to find even more time and money later on, after their best guess doesn’t work out in the marketplace.

In short, research is an incredibly valuable insurance policy. Given the time, money and effort that go into developing and producing a new package design, as well as the potential risk to the business of making a mistake (and even the opportunity cost of not choosing the best, albeit seemingly riskier, option), how can anyone afford not to invest just a little more to be sure they get it right?

Q: Isn't the only real test of how well a design will perform to place it on the shelf in stores and see how many people buy it?

A: Choosing the best means of assessing designs is a matter of balancing how closely a test approximates the realities of being in market against the cost and time required to do so.

There is no question that the most reliable way to determine how well a new design will perform in market is to print packages, place them on shelf and measure the sales. Of course, doing so is prohibitive in both cost and time, even in limited locations or for just one design; it is totally unrealistic to consider this approach for multiple design options.

In addition, this approach would reveal only how well the new design sells, but nothing about why – or why not.

The research approaches available to validate designs prior to market introduction strike different balances between these two critical considerations (realism vs. investment). At times, a compromise is reached by using a laboratory setting but with live, 3D mock-ups instead of 2D photographs. Although the stimuli are somewhat more costly and time-consuming to produce (the research procedure and its cost are the same), this approach is particularly useful for structural packaging innovations or for certain colors, materials or printing techniques that cannot be fully rendered in 2D.

Q: So how should research be used to ensure that the best designs get to market?

A: Research can be used at three points in the design process:

- Before design work begins (pre-design) to learn what is working and what isn’t, and to inform the design brief

- Early in the design process (screening) to help narrow the number of concepts and provide input for optimization

- Prior to market introduction (validation) to determine which design is the best to pursue

At each stage, there are several techniques that can be used to obtain actionable insights. Determining when in the process to conduct research and which type of method to employ will depend on several factors, including:

- How much and what type of information already exists

- What are the brand objectives (e.g., revive a dying brand or maintain a leadership position, etc.)

- What are the sales objectives (e.g., attract new users or encourage increased purchases among existing users, etc.)

- What are the design objectives (e.g., strengthen overall visibility or enhance flavor selection, etc.)

- What are the logistical parameters (e.g., budget, timeline, etc.)

The best course of action will be based on considering all of these factors and more. And the determination of which approach or approaches to use will be reached most efficiently through early planning discussions among the key process stakeholders: design, research and marketing.

Ultimately, the most effective use of research in the design process will be achieved through frequent, open and honest dialogue. That way, shoppers’ needs will be met, and everyone will win.

Jonathan Asher is Perception Research Services’ senior vice president. Reach him at jasher@prsresearch.com.

[Photos from Flickr users: hly0002 and pennycooper80]